Predicting Human Perceptions of Robot Performance During Navigation Tasks

Abstract

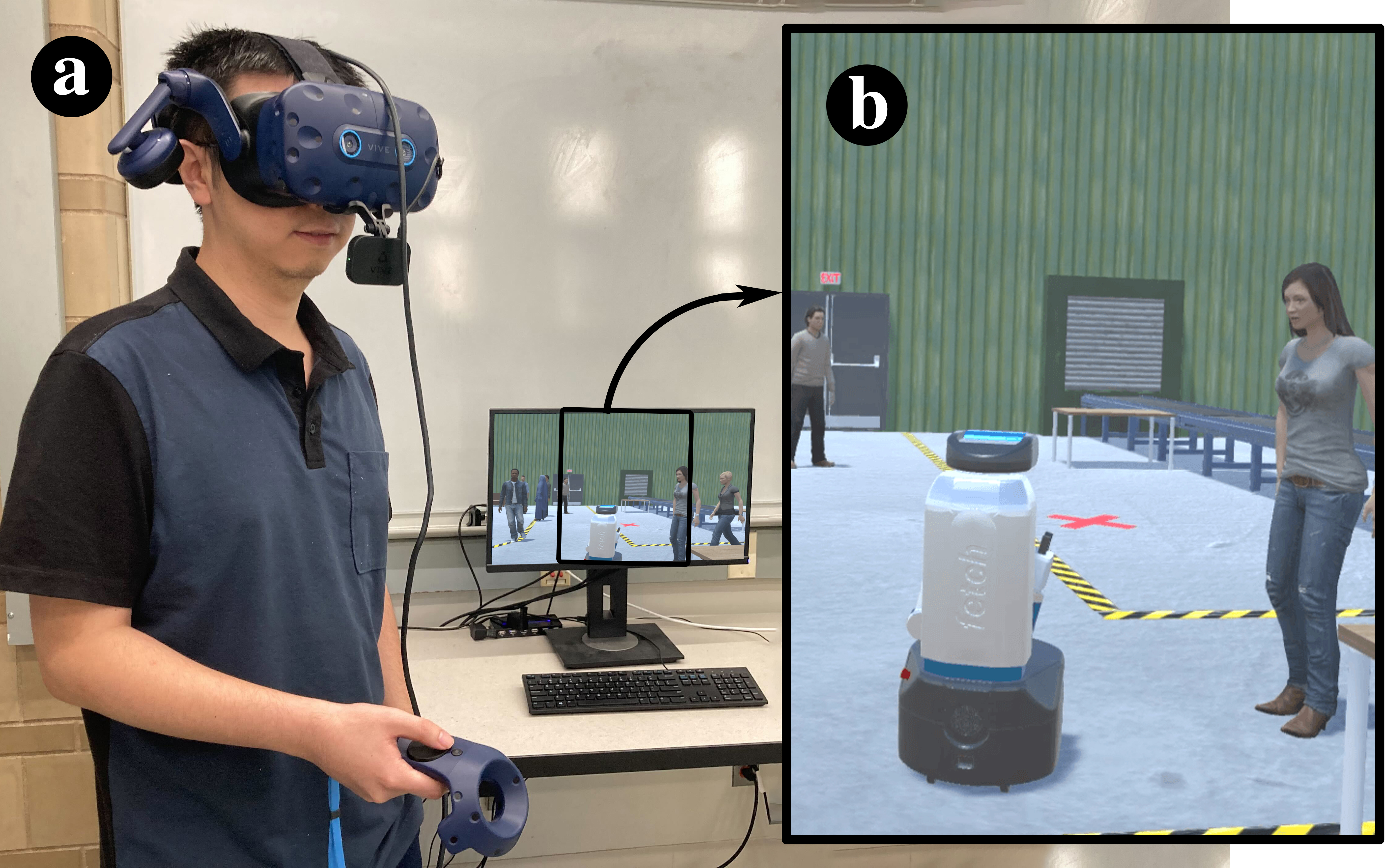

Understanding human perceptions of robot performance is crucial for designing socially

intelligent robots that can adapt to human expectations. Current approaches often rely on surveys,

which can disrupt ongoing human-robot interactions. As an alternative, we explore predicting

people's perceptions of robot performance using non-verbal behavioral cues and machine learning

techniques. We contribute the SEAN TOGETHER Dataset consisting of observations of an interaction

between a person and a mobile robot in Virtual Reality, together with perceptions of robot

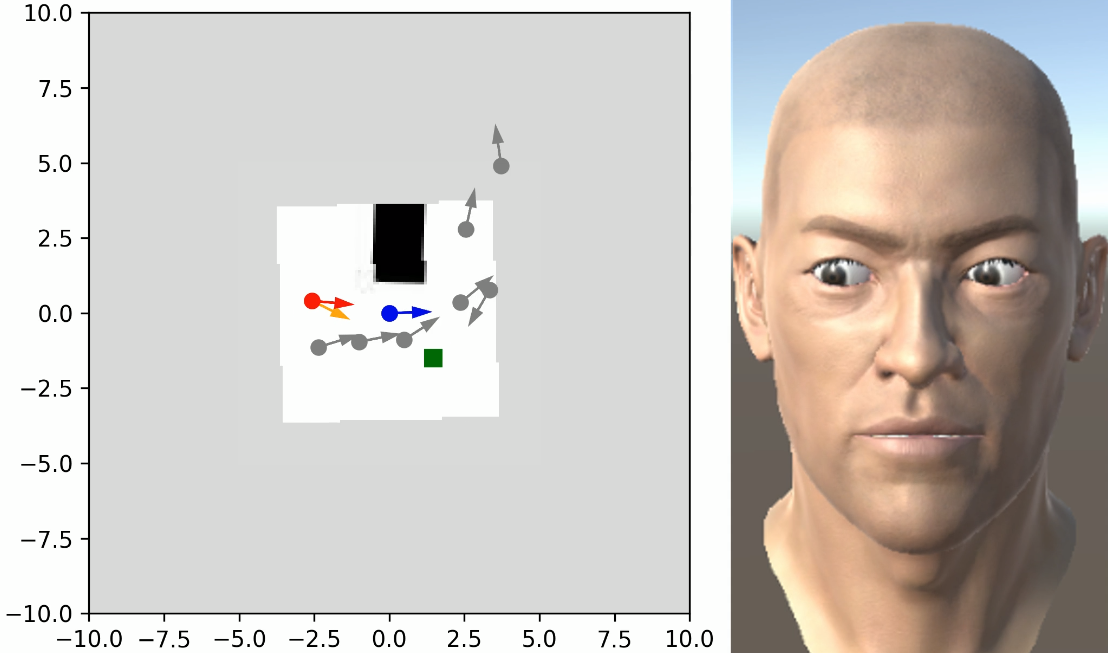

performance provided by users on a 5-point scale. We then analyze how well humans and supervised

learning techniques can predict perceived robot performance based on different observation types

(like facial expression and spatial behavior features). Our results suggest that facial expressions

alone provide useful information; but in the navigation scenarios that we considered, reasoning

about spatial features in context is critical for the prediction task. Also, supervised learning

techniques outperformed humans' predictions in most cases. Further, when predicting robot performance

as a binary classification task on unseen users' data, the $F_1$-Score of machine learning models more

than doubled that of predictions on a 5-point scale. This suggested good generalization capabilities,

particularly in identifying performance directionality over exact ratings. Based on these findings,

we conducted a real-world demonstration where a mobile robot uses a machine learning model to predict

how a human who follows it perceives it. Finally, we discuss the implications of our results for

implementing these supervised learning models in real-world navigation. Our work paves the path

to automatically enhancing robot behavior based on observations of users and inferences about

their perceptions of a robot.
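
To make the comparison between 5-point and binary prediction concrete, here is a minimal sketch of how the same set of predictions can be scored both ways. It is not the evaluation code used in the paper: the ratings are made up, the `to_binary` threshold (ratings above 3 count as positive) is an assumption, and macro-averaged $F_1$ is an assumed choice of averaging.

```python
def to_binary(rating, threshold=3):
    """Collapse a 1-5 rating into 1 (good) or 0 (not good).

    The threshold is a hypothetical choice for illustration only.
    """
    return 1 if rating > threshold else 0


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all labels seen in either list."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Made-up ratings: predictions are often off by one point,
# but always on the correct side of the threshold.
true_ratings = [5, 4, 2, 1, 3, 4, 2, 5]
pred_ratings = [5, 5, 1, 1, 2, 4, 3, 4]

f1_five = macro_f1(true_ratings, pred_ratings)
f1_binary = macro_f1(
    [to_binary(r) for r in true_ratings],
    [to_binary(r) for r in pred_ratings],
)
print(f"5-point macro F1: {f1_five:.2f}")
print(f"binary macro F1:  {f1_binary:.2f}")
```

In this toy case, off-by-one errors lower the 5-point score but leave the binary score untouched, which is the sense in which a model can capture performance directionality without matching exact ratings.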

Paper

Dataset

A brief description of the dataset can be found in the README file.

The zip file containing the VR dataset can be downloaded from Google Drive at the following link:

The zip file containing the real-world demonstration dataset can be downloaded from Google Drive at the following link:

The source code for our experiments can be found at: